Ad-hoc Voice Query Application

Capstone II Project

Source: https://bitbucket.org/cps491s19-team1/cps491-scrum-team1.git

Live: https://cps491-team1.myvano.com:8080/ (Username: dev, Password: 491squad)

University of Dayton

Department of Computer Science

CPS 491 - Capstone II, Spring 2019

Instructor: Dr. Phu Phung

Team Members

- Vince Belanger, belangerv1@udayton.edu

- Gustavo Van Overberghe, vanoverbergheg1@udayton.edu

- Stephanie D’Souza, dsouzas1@udayton.edu

- Ryan Farrar, farrarr1@udayton.edu

Overview

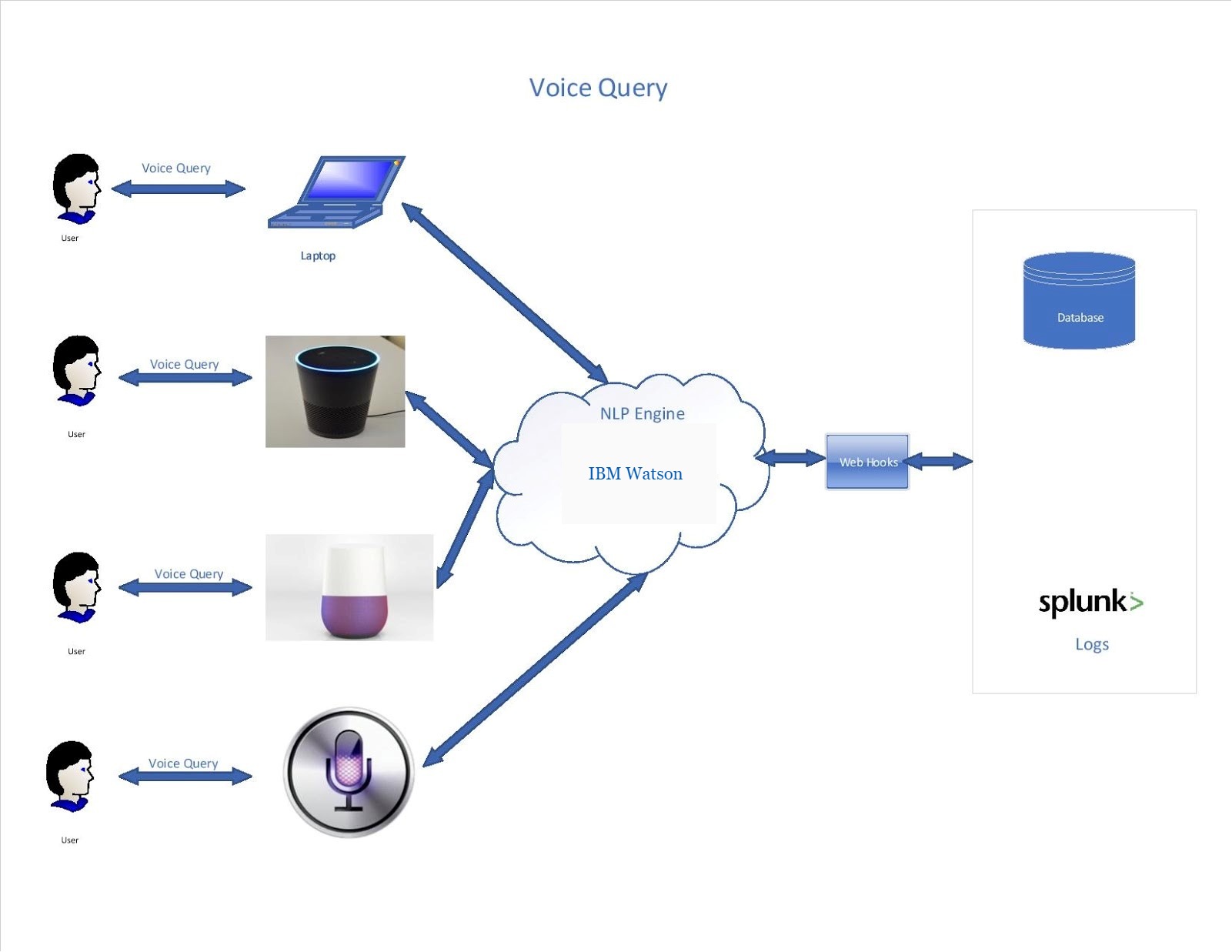

The diagram above visualizes the data flow in the final version of our project. It shows each layer: the user's vocal input, the reception of that audio by a machine, and the translation of that voice to text through an API call. The text is then analyzed by a natural language processor, which pulls out keywords to formulate a query. The query is then sent through webhooks to a database, from which the requested information is pulled.

Figure 1 - Overview of the VQL sequence
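As a rough illustration of the data flow in Figure 1, the following Python sketch chains the stages together using stand-ins. It is not the project's implementation: the function names, the `METRICS` and `PERIODS` vocabularies, and the payload shape are all hypothetical. The voice-to-text API call is replaced by a stub that treats the "audio" as UTF-8 text, and the natural language processor is replaced by a simple keyword lookup.

```python
import json
import re

# Hypothetical vocabulary mapping spoken words to query fields (illustrative only).
METRICS = {"balance": "balance", "payments": "payment_total", "spending": "spend_total"}
PERIODS = {"today": "day", "week": "week", "month": "month", "year": "year"}


def speech_to_text(audio: bytes) -> str:
    """Stand-in for the voice-to-text API call: the 'audio' is already UTF-8 text."""
    return audio.decode("utf-8")


def extract_keywords(text: str) -> dict:
    """Stand-in for the NLP step: pick out the first known metric and period word."""
    words = re.findall(r"[a-z]+", text.lower())
    metric = next((METRICS[w] for w in words if w in METRICS), None)
    period = next((PERIODS[w] for w in words if w in PERIODS), None)
    return {"metric": metric, "period": period}


def build_webhook_payload(keywords: dict) -> str:
    """Serialize the query as a JSON body that a webhook request might carry."""
    return json.dumps({"query": keywords}, sort_keys=True)


def handle_utterance(audio: bytes) -> str:
    """Run one utterance through every stage up to the webhook payload."""
    return build_webhook_payload(extract_keywords(speech_to_text(audio)))


if __name__ == "__main__":
    print(handle_utterance(b"What is my balance this month?"))
```

In a real deployment each stub would be replaced by the corresponding service call, and the payload would be posted to the webhook endpoint rather than printed.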

Project Context and Scope

Using an existing voice-to-text library, we will develop an ad-hoc voice query program that allows users to retrieve financial information from various sources by speaking to the program. We plan to target a web environment first and, if we are on track for the semester, to develop a mobile application as well.

Synchrony expressed interest in a possible final solution called a “voice query language”, which would be a detailed mapping of English words to SQL, essentially becoming a query language of its own. This is outside our project’s scope, but we hope to build the foundations for such a system in the event that Synchrony wishes to develop a full-fledged “voice query language” based on our work.
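To make the idea of mapping English words to SQL concrete, here is a minimal sketch, assuming a single hypothetical `payments` table with an `amount` column. The `AGGREGATES` word list and the `translate` and `run` helpers are invented for illustration and do not describe anything Synchrony or the team has specified. Because the SQL is assembled only from a fixed set of known fragments, the spoken text itself never reaches the database.

```python
import sqlite3

# Hypothetical mapping from English words to SQL aggregate functions.
AGGREGATES = {"total": "SUM", "average": "AVG", "highest": "MAX", "lowest": "MIN"}


def translate(phrase: str) -> str:
    """Translate a phrase such as 'average payments' into a SQL statement."""
    words = phrase.lower().split()
    aggregate = next((AGGREGATES[w] for w in words if w in AGGREGATES), None)
    if aggregate is None:
        raise ValueError(f"no known aggregate word in: {phrase!r}")
    # The table and column names are fixed assumptions, not taken from user input.
    return f"SELECT {aggregate}(amount) FROM payments"


def run(conn: sqlite3.Connection, phrase: str):
    """Execute the translated statement and return the single resulting value."""
    return conn.execute(translate(phrase)).fetchone()[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE payments (amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?)", [(10.0,), (20.0,), (30.0,)])
    print(translate("what is the average of my payments"))
    print(run(conn, "what is the average of my payments"))
```

A full voice query language would need a far richer grammar (filters, date ranges, joins); this sketch only shows the word-to-fragment lookup at its core.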

Acknowledgements

Thank you to Synchrony and our mentors for their help in this project.